Navigating Global Regulatory Expectations for AI-Enabled Medical Devices

The landscape of artificial intelligence in medical devices has transformed dramatically over the past five years, with regulatory bodies worldwide scrambling to establish frameworks that balance innovation with patient safety. As AI technologies become increasingly sophisticated and autonomous, manufacturers face the complex challenge of navigating diverse regulatory expectations across multiple jurisdictions while maintaining competitive advantage in this rapidly evolving market.

The global regulatory environment presents a patchwork of approaches, each reflecting different philosophical perspectives on risk management and innovation promotion. The United States Food and Drug Administration has emerged as a leader in pragmatic AI regulation through its Total Product Lifecycle approach, culminating in the December 2024 finalization of guidance on Predetermined Change Control Plans for AI-enabled device software functions. This framework allows manufacturers to predefine algorithm update parameters during premarket submission, enabling post-approval modifications within approved safety boundaries without requiring additional regulatory submissions for each change.

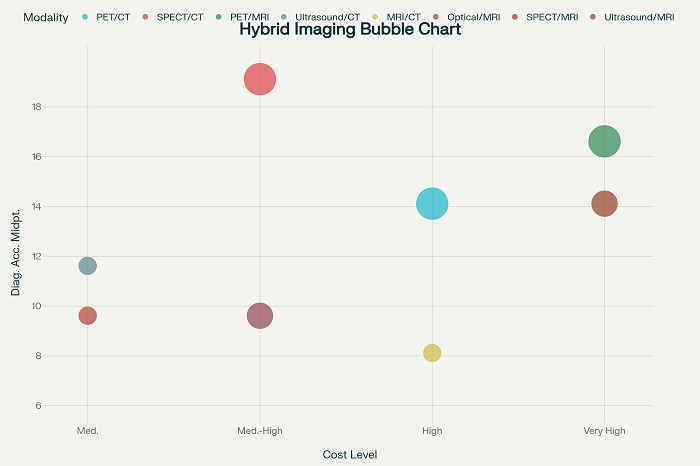

Global regulatory timeline for AI-enabled medical devices showing key compliance milestones by region


Regional Approaches and Strategic Implications

The European Union has adopted the most comprehensive regulatory stance through the dual application of the Medical Device Regulation (MDR) and the Artificial Intelligence Act, which entered into force in August 2024 and applies in phases. Under this framework, AI-enabled medical devices that require notified body conformity assessment under the MDR are classified as high-risk AI systems, subjecting them to stringent conformity assessment procedures that extend beyond traditional medical device requirements. The AI Act mandates comprehensive risk management systems, rigorous data governance protocols, human oversight mechanisms, and detailed technical documentation that demonstrates compliance with both safety and ethical standards.

This dual compliance burden creates significant challenges for manufacturers seeking European market access. Unlike the FDA’s flexible PCCP approach, the EU framework requires that substantial algorithm modifications undergo re-evaluation through notified body assessment processes. Although most AI Act provisions apply from August 2026, the obligations for high-risk AI systems embedded in products already covered by EU product legislation, including medical devices, apply from August 2027. This timeline has created urgency among manufacturers to align their quality management systems with the enhanced requirements.

The United Kingdom has charted a distinctive course through the AI Airlock, a regulatory sandbox run by the Medicines and Healthcare products Regulatory Agency (MHRA) that emphasizes real-world evidence gathering and collaborative regulatory development. This approach reflects the UK’s post-Brexit strategy of maintaining competitive advantage through regulatory innovation while ensuring patient safety. Japan and South Korea are likewise developing risk-based frameworks that attempt to balance innovation promotion with safety assurance, incorporating their own requirements for AI interpretability and cybersecurity compliance.

China’s approach emphasizes data localization and state oversight, requiring manufacturers to conduct localized clinical trials and obtain specific approvals for algorithm performance evaluation. This creates additional complexity for global manufacturers who must develop region-specific validation strategies while maintaining consistent product quality across markets.

Implementation Challenges and Compliance Strategies

The fundamental challenge for manufacturers lies in managing the inherent tension between the adaptive nature of AI systems and traditional regulatory frameworks designed for static medical devices. Machine learning algorithms continuously evolve through exposure to new data, potentially altering their performance characteristics in ways that traditional change control processes cannot adequately address.

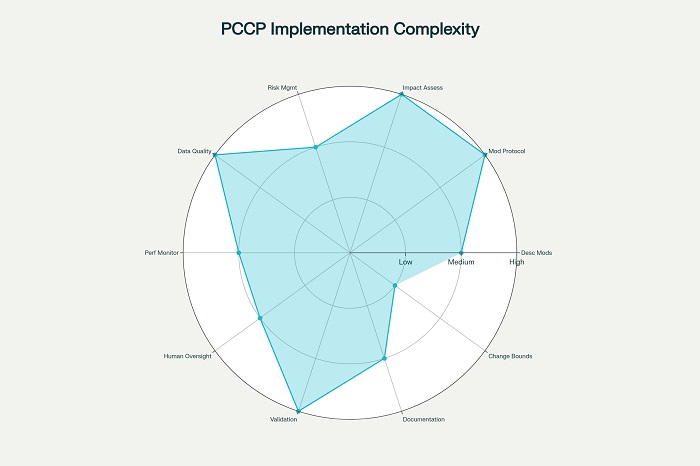

Implementation complexity assessment for Predetermined Change Control Plan (PCCP) requirements

The FDA’s PCCP framework represents the most mature approach to this challenge, requiring manufacturers to define three essential components: description of anticipated modifications, modification protocols for development and validation, and impact assessments of planned changes. However, implementing effective PCCPs demands sophisticated technical capabilities in algorithm validation, data governance, and risk assessment that many organizations are still developing.
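To make the three PCCP components more concrete, the following is a minimal sketch of how a manufacturer might record planned modifications internally and check whether a candidate update stays inside the pre-agreed performance bounds. The class names, field names, and threshold values are hypothetical illustrations, not terms or limits defined by the FDA guidance; a real plan would reference the actual protocol and impact assessment documents.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass(frozen=True)
class PlannedModification:
    """One anticipated change, mirroring the three PCCP components (hypothetical schema)."""
    name: str               # description of the anticipated modification
    protocol_ref: str       # pointer to the modification protocol (development and validation)
    impact_summary: str     # summary of the impact assessment for this change
    min_sensitivity: float  # illustrative acceptance bound, chosen by the manufacturer
    min_specificity: float  # illustrative acceptance bound, chosen by the manufacturer


@dataclass
class ChangeControlPlan:
    """In-memory registry of planned modifications (an assumption, not an FDA format)."""
    modifications: Dict[str, PlannedModification] = field(default_factory=dict)

    def add(self, mod: PlannedModification) -> None:
        self.modifications[mod.name] = mod

    def is_within_plan(self, name: str, sensitivity: float, specificity: float) -> bool:
        """True only if the change was pre-declared and meets both acceptance bounds."""
        mod = self.modifications.get(name)
        if mod is None:
            # Changes that were never described fall outside the plan entirely.
            return False
        return sensitivity >= mod.min_sensitivity and specificity >= mod.min_specificity
```

In this sketch, an update that fails the check, or that was never declared in the plan, would be routed to the manufacturer's normal regulatory assessment rather than released under the plan.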

Data quality management emerges as perhaps the most complex aspect of AI device regulation across all jurisdictions. Manufacturers must demonstrate that training datasets are representative, unbiased, and traceable while maintaining compliance with varying data protection requirements. The European AI Act’s emphasis on algorithmic fairness and non-discrimination adds additional layers of complexity, requiring ongoing bias monitoring and mitigation strategies throughout the device lifecycle.
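One simple form of ongoing bias monitoring is to compare a performance metric across patient subgroups. The sketch below computes per-subgroup sensitivity and the largest gap between subgroups. The function names and the record layout are assumptions made for illustration; which metric and which tolerance are appropriate depends on the device and is not prescribed by any regulation cited here.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Each record is (subgroup label, true label, predicted label) with binary 0/1 labels.
Record = Tuple[str, int, int]


def subgroup_sensitivity(records: Iterable[Record]) -> Dict[str, float]:
    """Return sensitivity (true positive rate) per subgroup.

    Subgroups with no positive cases are omitted because the rate is undefined.
    """
    true_positives = defaultdict(int)
    positives = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            positives[group] += 1
            if y_pred == 1:
                true_positives[group] += 1
    return {g: true_positives[g] / positives[g] for g in positives}


def max_sensitivity_gap(records: Iterable[Record]) -> float:
    """Largest difference in sensitivity between any two subgroups (0.0 if none)."""
    rates = subgroup_sensitivity(records)
    if not rates:
        return 0.0
    return max(rates.values()) - min(rates.values())
```

A manufacturer might track this gap over time and trigger a documented review when it exceeds an internally justified tolerance.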

Human oversight requirements present another universal challenge, with regulators worldwide emphasizing the need for meaningful human control over AI-driven clinical decisions. Manufacturers must balance this requirement against the desire to use AI’s full capabilities, and must ensure that oversight mechanisms do not degrade into mere formalities that fail to protect patients.

Strategic Recommendations for Global Compliance

Successful navigation of this complex regulatory landscape requires strategic planning that anticipates future harmonization efforts while addressing current jurisdictional differences. Organizations should prioritize development of robust quality management systems that can accommodate the most stringent requirements across target markets, using the EU AI Act as a baseline for global compliance strategies.

Investment in explainable AI technologies will become increasingly important as regulators worldwide emphasize transparency and interpretability requirements. Manufacturers should also develop comprehensive post-market surveillance capabilities that can demonstrate real-world performance across diverse patient populations and clinical environments.
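As one illustration of a post-market surveillance capability, the sketch below tracks accuracy over a rolling window of confirmed cases and flags when it drops below a chosen level. The class name, window size, and threshold are hypothetical; real surveillance would typically use several metrics, stratify by site and population, and follow the manufacturer's documented procedures.

```python
from collections import deque
from typing import Optional


class RollingAccuracyMonitor:
    """Tracks accuracy over the most recent confirmed cases (illustrative only)."""

    def __init__(self, window_size: int, alert_threshold: float) -> None:
        # deque with maxlen silently discards the oldest outcome once full.
        self.outcomes = deque(maxlen=window_size)
        self.alert_threshold = alert_threshold

    def record(self, correct: bool) -> None:
        """Store whether the device output matched the confirmed ground truth."""
        self.outcomes.append(bool(correct))

    def accuracy(self) -> Optional[float]:
        """Accuracy over the current window, or None if nothing has been recorded."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_review(self) -> bool:
        """Flag only when the window is full and accuracy is below the threshold."""
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.accuracy() < self.alert_threshold
```

Waiting for a full window before flagging is a deliberate choice in this sketch: it avoids alerts driven by a handful of early cases, at the cost of slower detection.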

The establishment of regulatory science capabilities within organizations will prove essential for managing ongoing compliance obligations. This includes developing expertise in algorithm validation methodologies, bias detection and mitigation strategies, and risk assessment frameworks that can adapt to evolving regulatory expectations.

As the regulatory landscape continues to mature, the goal of global harmonization remains elusive but increasingly necessary. The target date of 2027 for meaningful international coordination reflects the urgency of establishing consistent standards that can support innovation while ensuring patient safety across all markets. Organizations that invest early in comprehensive compliance strategies will be best positioned to capitalize on the transformative potential of AI in healthcare while meeting their regulatory obligations across all jurisdictions.